This month AMD will finally release their first entry-level RDNA2-based gaming product, the Radeon RX 6500 XT. This new GPU is set to come in at a $200 MSRP, though of course we expect it to cost more than that beyond an initial limited run, which may hit close to the MSRP. In reality, the 6500 XT is probably going to end up priced between $300 and $400 at retail, but we’ll have to wait and see on that one.

It’s been widely reported that the 6500 XT is restricted to PCI Express 4.0 x4 bandwidth. AMD hasn’t made that public yet and we’re bound by an NDA, but this was already confirmed by ASRock, so it’s no longer a secret. But what might this mean for the Radeon RX 6500 XT? Opinions are divided on this one. Some of you believe this will cripple the card, while others point to PCI Express bandwidth tests using flagship graphics cards which suggest the 6500 XT will be fine, even in a PCI Express 3.0 system.

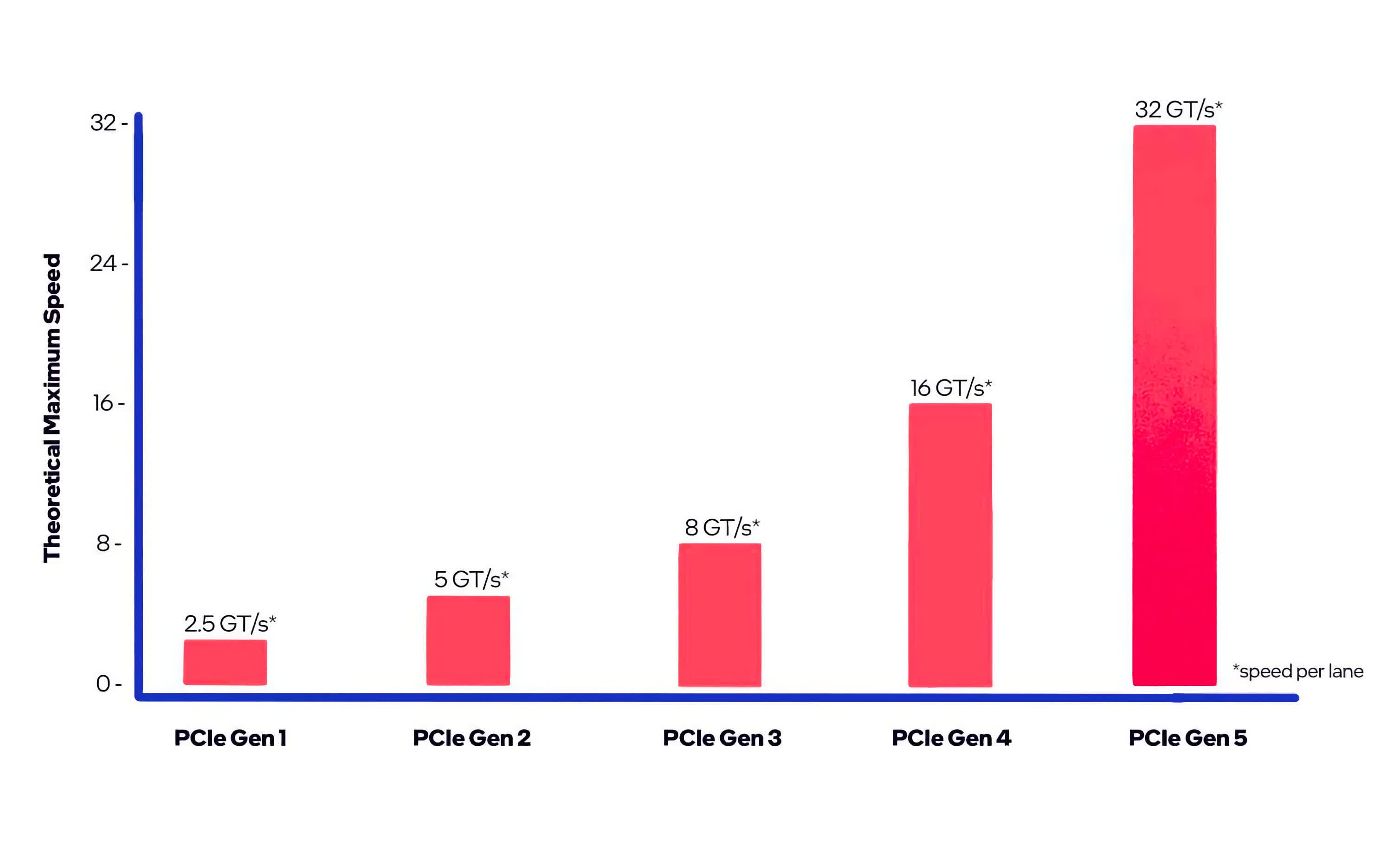

With PCIe 4.0 you get roughly 2 GB/s of bandwidth per lane, giving the 6500 XT a ~8 GB/s communication link with the CPU and system memory. But if you install it in a PCIe 3.0 system that figure is halved, and this is where you could start to run into problems.

Unidirectional Bandwidth: PCIe 3.0 vs. PCIe 4.0

| PCIe Generation | x1 | x4 | x8 | x16 |
| --- | --- | --- | --- | --- |
| PCIe 3.0 | 1 GB/s | 4 GB/s | 8 GB/s | 16 GB/s |
| PCIe 4.0 | 2 GB/s | 8 GB/s | 16 GB/s | 32 GB/s |
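
To make the arithmetic concrete, here is a minimal Python sketch of the table above. The function name and the lookup table are our own illustration, and it assumes the link simply runs at the lower generation of the card and the slot, using the rounded per-lane figures quoted in this article.

```python
# Approximate one-way bandwidth per lane in GB/s (rounded figures from the table).
PER_LANE_GBPS = {3: 1.0, 4: 2.0}


def link_bandwidth_gbps(card_gen: int, slot_gen: int, lanes: int) -> float:
    """Rough one-way bandwidth of a PCIe link in GB/s.

    Assumption: the link runs at the lower generation of the card and the slot.
    """
    negotiated_gen = min(card_gen, slot_gen)
    return PER_LANE_GBPS[negotiated_gen] * lanes


# A PCIe 4.0 x4 card (the reported 6500 XT configuration) in a 4.0 slot.
print(link_bandwidth_gbps(card_gen=4, slot_gen=4, lanes=4))  # 8.0
# The same card in a PCIe 3.0 slot: the figure is halved.
print(link_bandwidth_gbps(card_gen=4, slot_gen=3, lanes=4))  # 4.0
# The 5500 XT's stock PCIe 4.0 x8 configuration.
print(link_bandwidth_gbps(card_gen=4, slot_gen=4, lanes=8))  # 16.0
```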

The folks over at TechPowerUp have tested an RTX 3080 with average frame rate performance at 1080p only dropping ~10% when limited to 4 GB/s of PCIe bandwidth. With that being a significantly more powerful GPU, many have assumed the 6500 XT will be just fine. The problem with that assumption is that you’re ignoring that the RTX 3080 has a 10GB VRAM buffer, while the 6500 XT only has a 4GB VRAM buffer. The smaller the memory buffer, the more likely you are to dip into system memory, and this is where the limited PCIe bandwidth can play havoc.
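
As a rough back-of-the-envelope illustration of why spilling into system memory hurts, the sketch below estimates how much data could cross the link during a single frame. This is a simplified upper bound of our own, not a measurement: it assumes 1 GB = 1000 MB, ignores protocol overhead and latency, and says nothing about how a given game or driver actually streams data.

```python
def per_frame_budget_mb(link_gbps: float, fps: float) -> float:
    """Upper bound on data (in MB) that can cross the link during one frame.

    Assumes 1 GB = 1000 MB and a perfectly utilized link (an idealization).
    """
    return link_gbps * 1000 / fps


# Compare the three link speeds discussed in this article at a 60 fps target.
for label, gbps in [("PCIe 3.0 x4", 4.0), ("PCIe 4.0 x4", 8.0), ("PCIe 4.0 x8", 16.0)]:
    print(f"{label}: ~{per_frame_budget_mb(gbps, 60):.1f} MB per frame")
```

Even in this idealized case, a 4 GB/s link leaves well under 100 MB per frame at 60 fps, so a card that regularly has to fetch data from system memory has very little room to work with.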

Of course, the RTX 3080 was tested using ultra quality settings whereas the 6500 XT is more suited to dialed down presets, such as ‘medium’, for example. AMD themselves would argue that the PCIe 3.0 bandwidth won’t be an issue for the 6500 XT as gamers should ensure they’re not exceeding the memory buffer for optimal performance, but with a 4GB graphics card in modern games that’s very difficult.

We’ll discuss more about that towards the end of this review, but for now let’s explain what we’re doing here. Although 6500 XT reviews are only days away, we decided not to wait. Initially our idea was to investigate PCIe performance with a similar spec product for our internal reference, but the results were so interesting that we decided to make a full feature out of it.

PCI Express: Unidirectional Bandwidth in x1 and x16 Configurations

| Generation | Year of Release | Data Transfer Rate | Bandwidth x1 | Bandwidth x16 |
| --- | --- | --- | --- | --- |
| PCIe 1.0 | 2003 | 2.5 GT/s | 250 MB/s | 4.0 GB/s |
| PCIe 2.0 | 2007 | 5.0 GT/s | 500 MB/s | 8.0 GB/s |
| PCIe 3.0 | 2010 | 8.0 GT/s | 1 GB/s | 16 GB/s |
| PCIe 4.0 | 2017 | 16 GT/s | 2 GB/s | 32 GB/s |
| PCIe 5.0 | 2019 | 32 GT/s | 4 GB/s | 64 GB/s |
| PCIe 6.0 | 2021 | 64 GT/s | 8 GB/s | 128 GB/s |
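
The bandwidth columns above are rounded. As a sketch of where they come from, the Python below derives per-lane bandwidth from the transfer rate and the line encoding (8b/10b for PCIe 1.0 and 2.0, 128b/130b for PCIe 3.0 through 5.0). The names are our own, and PCIe 6.0 is left out because it uses a different signaling and encoding scheme that this simple formula does not cover.

```python
# Transfer rate per lane in GT/s, from the table above.
TRANSFER_RATE_GT = {1: 2.5, 2: 5.0, 3: 8.0, 4: 16.0, 5: 32.0}

# Line encoding per generation: (payload bits, total bits on the wire).
ENCODING = {1: (8, 10), 2: (8, 10), 3: (128, 130), 4: (128, 130), 5: (128, 130)}


def lane_bandwidth_gbps(gen: int) -> float:
    """One-way bandwidth of a single lane in GB/s, after encoding overhead."""
    payload_bits, total_bits = ENCODING[gen]
    # GT/s -> payload Gbit/s -> GB/s (8 bits per byte).
    return TRANSFER_RATE_GT[gen] * payload_bits / total_bits / 8


for gen in sorted(TRANSFER_RATE_GT):
    x1 = lane_bandwidth_gbps(gen)
    print(f"PCIe {gen}.0: x1 = {x1:.3f} GB/s, x16 = {16 * x1:.2f} GB/s")
```

By this calculation PCIe 3.0 works out to roughly 0.985 GB/s per lane rather than a flat 1 GB/s, which is why figures like "4 GB/s" for a 3.0 x4 link should be read as approximate.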

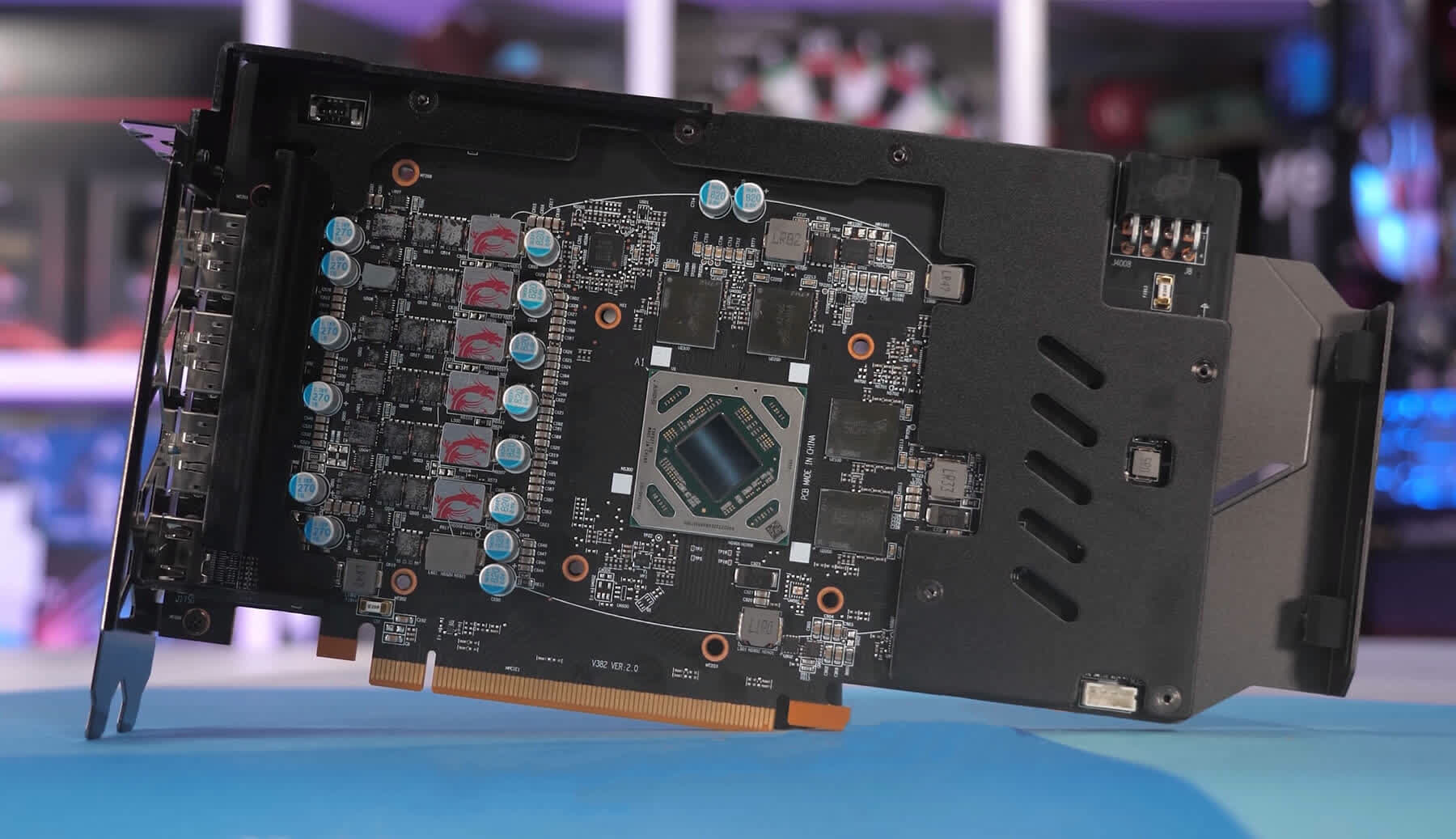

To gather some insight into what this could mean for the 6500 XT, we took the 5500 XT and benchmarked several configurations. First, I tested both the 4GB and 8GB versions using their stock PCIe 4.0 x8 configuration, then repeated the test with PCIe 4.0 x4, which is the same configuration the 6500 XT uses, and then again with PCIe 3.0 x4.

We’ve run these in a dozen games at 1080p and 1440p and for the more modern titles we’ve gone with the medium quality preset, which is a more realistic setting for this class of product. We’ll go over the data for most of the games tested and then we’ll do some side by side comparisons. Testing was performed in our Ryzen 9 5950X test system, changing the PCIe mode in the BIOS.

Given the 6500 XT and 5500 XT are expected to be fairly close in terms of performance based on benchmark numbers released by AMD, using the 5500 XT to simulate the potential PCIe issues of the 6500 XT should be fairly accurate. We’ll make the disclaimer that the 6500 XT is based on the more modern RDNA2 architecture and this could help alleviate some of the PCIe bandwidth issues. I’m not expecting that to be the case, but we’ll keep the architectural difference in mind.

Benchmarks

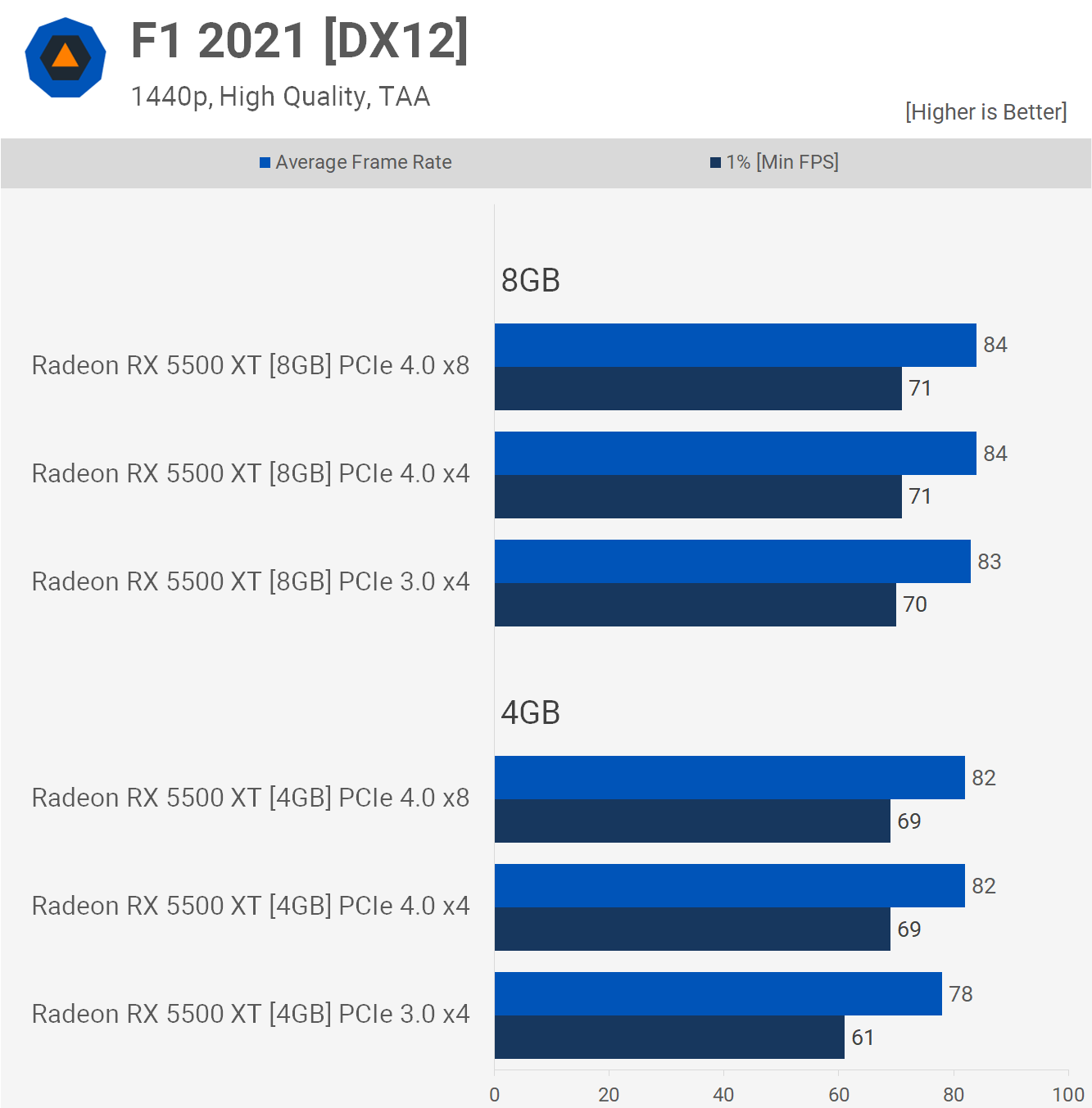

Starting with F1 2021, we see that limiting the PCIe bandwidth with the 8GB 5500 XT has little to no impact on performance. Then for the 4GB model we are seeing a 9% reduction in 1% low performance and a 6% hit to the average frame rate when comparing the stock PCIe 4.0 x8 configuration of the 5500 XT to PCIe 3.0 x4.

That’s not a massive performance hit, but it’s still a reasonable drop for a product that’s not all that powerful to begin with, though it does perform well in F1 2021 using the high quality preset.

Jumping up to 1440p we see no real performance loss with the 8GB model, whereas the 4GB version drops ~12% of its original performance. This isn’t a significant loss in the grand scheme of things and the game was perfectly playable, but for a card that’s not exactly packing oodles of compute power, a double-digit performance hit will likely raise an eyebrow.

Things get much much worse in Shadow of the Tomb Raider. A couple of things to note here… although we’re using the highest quality preset for this game, it was released back in 2018 and with sufficient PCI Express bandwidth, the 5500 XT can easily drive 60 fps on average, resulting in an enjoyable and very playable experience.

We see that PCIe bandwidth is far less of an issue for the 8GB model and that’s because the game does allocate up to 7 GB of VRAM using these quality settings at 1080p.

The 4GB 5500 XT plays just fine using its stock PCIe 4.0 x8 configuration. There were no crazy lag spikes, and the game was very playable and enjoyable under these conditions. When limited to PCIe 4.0 x4 bandwidth we did see a 6% drop in performance, though overall the gameplay was similar to the x8 configuration. If we then change to the PCIe 3.0 spec, performance tanks and, while still technically playable, frame stuttering becomes prevalent and the overall experience is quite horrible.

We’re talking about a 43% drop in 1% low performance for the 4GB model when comparing PCIe 4.0 operation to 3.0, which is a shocking performance reduction.

You could argue that we’re exceeding the VRAM buffer here, so it’s not a realistic test, but you’ll have a hard time convincing me of that, given how well the game played using PCIe 4.0 x8.

As you’d expect, jumping up to 1440p didn’t help and we’re still looking at a 43% hit to the 1% lows. When using PCI Express 4.0, the 4GB model was still able to deliver playable performance, while PCIe 3.0 crippled performance to the point where the game is simply not playable.

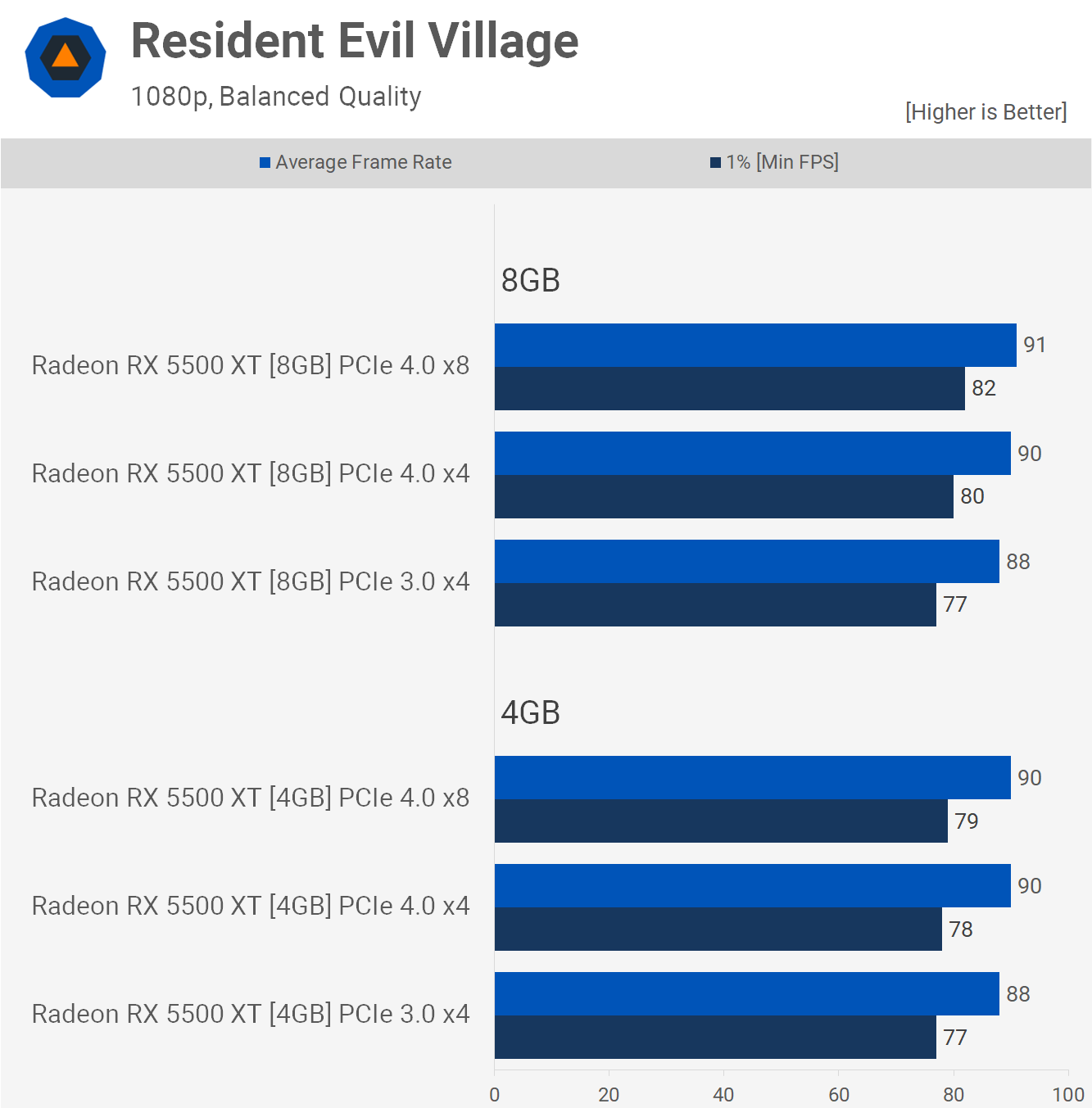

Resident Evil Village only requires 3.4 GB of VRAM in our test, so this is a good example of how these cards perform when kept within the memory buffer. We’re using the heavily dialed down ‘balanced’ quality preset, so those targeting 60 fps on average for these single player games will have some headroom to crank up the quality settings, though as we’ve seen you’ll run into performance related issues much sooner when using PCIe 3.0 with a x4 card.

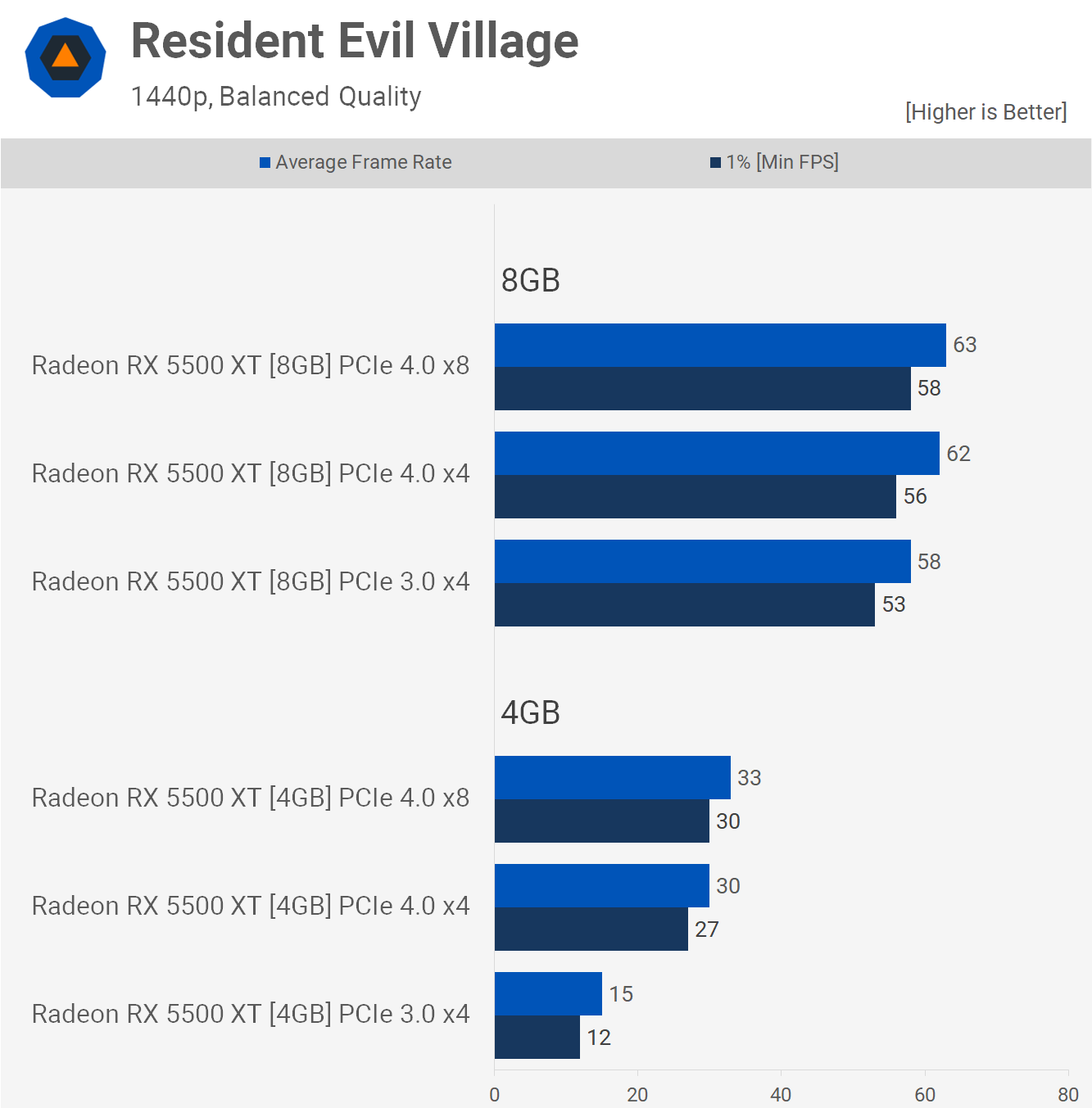

Speaking of which, we have a great example of that at 1440p which in our test pushed memory allocation up to 4.8 GB with usage around 4 GB. PCI Express bandwidth aside, the 4GB buffer alone crippled the 5500 XT here, and reducing the bandwidth to x4 destroys performance to the point where the card can no longer be used.

Rainbow Six Siege is another example of why heavily limiting PCI Express bandwidth of cards with smaller VRAM buffers is a bad idea. The 4GB 5500 XT is already up to 27% slower than the 8GB version, with the only difference between the two models being VRAM capacity.

But we see that limiting the PCIe bandwidth has a seriously negative impact on performance of the 4GB model. Halving the bandwidth from x8 to x4 in the 4.0 mode drops the 1% low by 21%. This is particularly interesting as it could mean even when used in PCIe 4.0 systems, the 6500 XT is still haemorrhaging performance due to the x4 bandwidth.

But it gets much worse for those of you with PCIe 3.0 systems, which at this point in time is most, particularly those seeking a budget GPU. Here we’re looking at a 52% drop in performance from the 4.0 x8 configuration to 3.0 x4. Worse still, 1% lows are now below 60 fps and while this could be solved by reducing the quality settings, the game was perfectly playable even with 4GB of VRAM when using the PCIe 4.0 x8 mode.

As you’d expect, it’s more of the same at 1440p, we’re looking at 1% lows that are slashed in half on the 4GB card when using PCI Express 3.0.

Moving on to Cyberpunk 2077, we tested using the medium quality preset with medium quality textures. This game is very demanding even using these settings, but with the full PCIe 4.0 x8 mode the 4GB 5500 XT was able to deliver playable performance with an average of 49 fps at 1080p. But when reducing the bus bandwidth with PCIe 3.0 x4, performance tanked by 24% and now the game is barely playable.

The 1440p data isn’t that relevant as you can’t really play Cyberpunk 2077 with a 5500 XT at this resolution using the medium quality settings, but here’s the data anyway.

We tested Watch Dogs: Legion using the medium quality preset and although the 4GB model is slower than the 8GB version as the game requires 4.5 GB of memory in our test using the medium quality preset, performance was still decent when using the standard PCIe configuration with 66 fps on average. Despite the fact that we must be dipping into system memory, the game played just fine.

However, reducing the PCIe bandwidth had a significant influence on performance: PCIe 4.0 x4 dropped performance by 24%, with PCIe 3.0 x4 destroying it by a 42% margin.

We’ve heard reports that the upcoming 6500 XT is all over the place in terms of performance. The limited 4GB buffer along with the gimped PCIe 4.0 x4 bandwidth is 100% the reason why, and we can see an example of that here at 1080p with the 5500 XT.

The PCIe 3.0 x4 mode actually looks better at 1440p relative to the 4.0 spec as the PCIe bandwidth bottleneck is less severe than the compute bottleneck at this resolution. Still, we’re talking about an unnecessary 36% hit to performance.

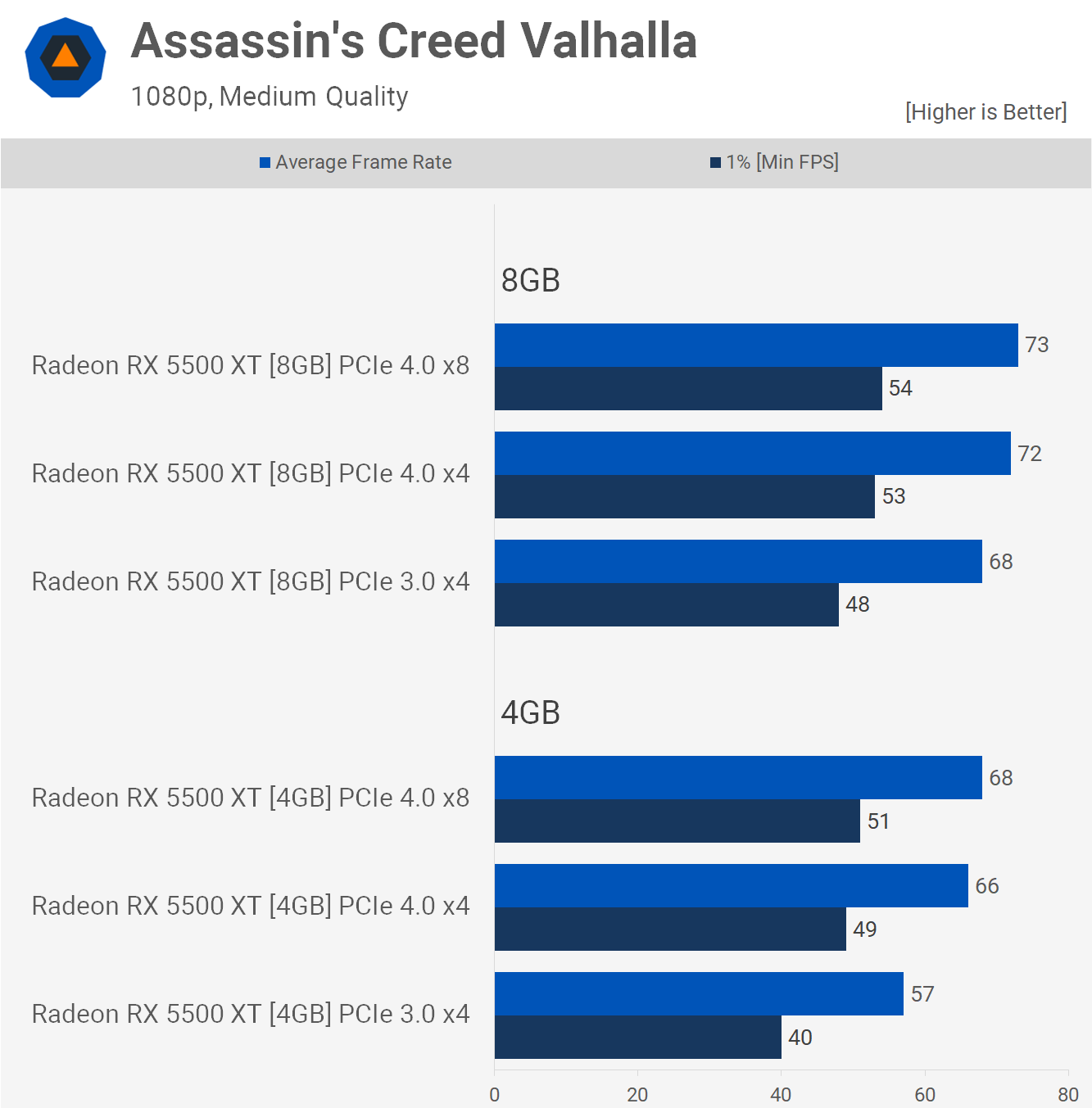

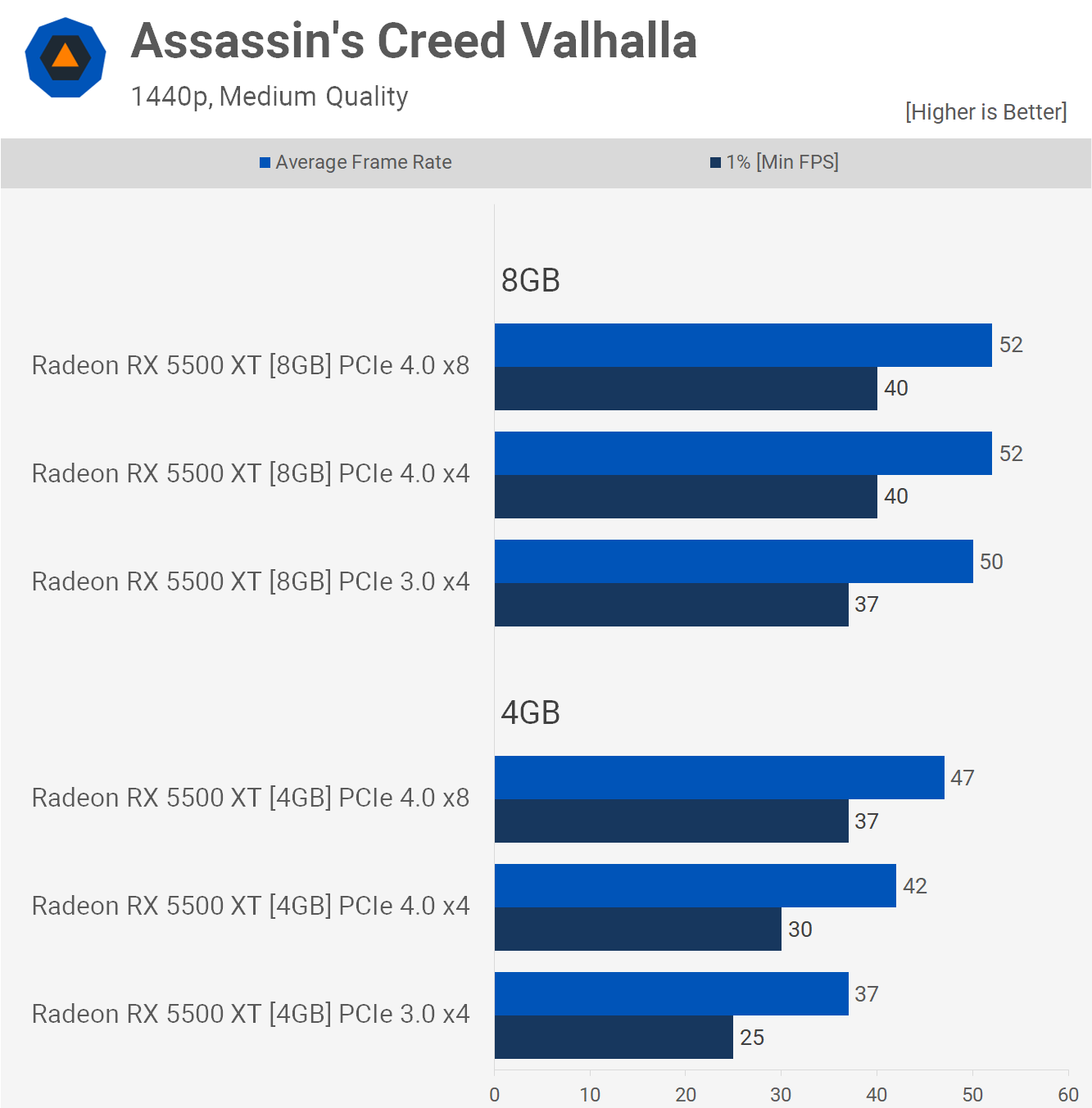

Assassin’s Creed Valhalla has been tested using the medium quality preset and we do see an 11% hit to performance for the 8GB model when using PCIe 3.0 x4, so that’s interesting as the game only required up to 4.2 GB in our test at 1080p.

That being the case, the 4GB model suffered more, dropping 1% lows by 22% from 51 fps to just 40 fps. The game was still playable, but that’s a massive performance hit to an already low-end graphics card.

The margins continued to grow at 1440p and now the PCIe 3.0 x4 configuration for the 4GB model was 32% slower than what we saw when using PCIe 4.0 x8. Obviously, that’s a huge margin, but it’s more than just numbers on a graph. The difference between these two was remarkable when playing the game, like we were comparing two very different tiers of product.

Far Cry 6, like Watch Dogs: Legion, is an interesting case study. Here we have a game that uses 7.2 GB of VRAM in our test at 1080p, using a dialed down medium quality preset. But what’s really interesting is that the 4GB and 8GB versions of the 5500 XT delivered virtually the same level of performance when fed at least x8 bandwidth in the PCIe 4.0 mode, which is the default configuration for these models.

Despite exceeding the VRAM buffer, at least that’s what’s being reported to us, the 4GB 5500 XT makes out just fine in the PCIe 4.0 x8 mode. However, limit it to PCIe 4.0 x4 and performance drops by as much as 26%. And again, remember the 6500 XT uses PCIe 4.0 x4. That means right away the upcoming 6500 XT is likely going to be heavily limited by PCIe bandwidth under these test conditions, even in a PCI Express 4.0 system.

But it gets far worse. If you use PCIe 3.0, we’re looking at a 54% decline for the average frame rate. Or another way to put it, the 4GB 5500 XT was 118% faster using PCIe 4.0 x8 compared to PCIe 3.0 x4, yikes.
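
The "54% decline" and "118% faster" figures describe the same gap from opposite directions. Here is a small Python sketch of the conversion (the helper names are ours). Working from the rounded 54% gives roughly 117%, so the quoted 118% presumably comes from the unrounded frame rates.

```python
def decline_to_speedup(decline_pct: float) -> float:
    """If B is decline_pct % slower than A, how much faster (in %) is A than B?"""
    return (1 / (1 - decline_pct / 100) - 1) * 100


def speedup_to_decline(speedup_pct: float) -> float:
    """If A is speedup_pct % faster than B, how much slower (in %) is B than A?"""
    return (1 - 1 / (1 + speedup_pct / 100)) * 100


print(f"{decline_to_speedup(54):.1f}% faster")   # 117.4% faster
print(f"{speedup_to_decline(118):.1f}% slower")  # 54.1% slower
```

This asymmetry is worth keeping in mind when reading the charts: a 50% decline is the same thing as the faster configuration being 100% ahead.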

Bizarrely, the 4GB 5500 XT still worked at 1440p with the full PCIe 4.0 x8 bandwidth but was completely broken when dropping below that. I would have expected no matter how much PCIe bandwidth you fed it here performance was still going to be horrible, but apparently not.

Using the ‘favor quality’ preset, Horizon Zero Dawn required 6.4 GB of VRAM at 1080p. Interestingly, despite not exceeding the VRAM buffer of the 8GB model we still saw an 11% decline in performance when forcing PCIe 3.0 x4 operation. Then with the 4GB model that margin effectively doubled to 23%. It’s worth noting that both PCIe 4.0 configurations roughly matched the performance of the 8GB model, so it was PCIe 3.0 where things get dicey once again.

The 1440p results are similar though here we’re more compute limited. Even so, reducing the PCIe bandwidth negatively impacted performance for both the 4GB and 8GB versions of the 5500 XT.

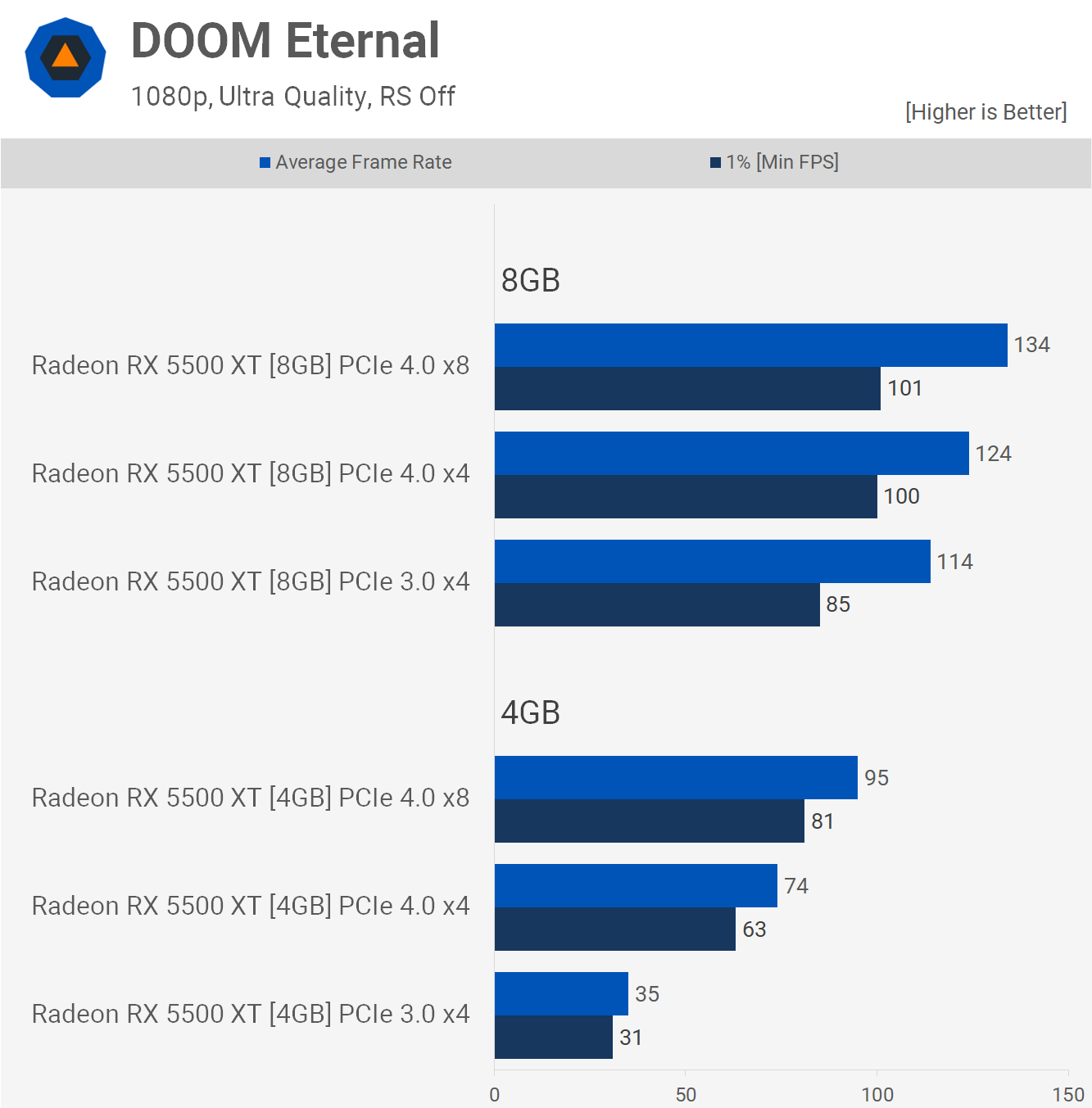

Doom Eternal is another interesting game to test with as this one tries to avoid exceeding the memory buffer by limiting the level of quality settings you can use. Here we’ve used the ultra quality preset for both models, but for the 4GB version we had to reduce texture quality from ultra to medium before the game would allow us to apply the preset.

At 1080p with the ultra quality preset and ultra textures the game uses up to 5.6 GB of VRAM in our test scene. Dropping the texture pool size to ‘medium’ reduced that figure to 4.1 GB. So the 8GB 5500 XT sees VRAM usage hit 5.6 GB in this test, while the 4GB model maxes out, as the game would use 4.1 GB if available.

Despite tweaking the settings, the 4GB 5500 XT is still 29% slower than the 8GB version when using PCIe 4.0 x8. Interestingly, reducing PCIe bandwidth for the 8GB model still heavily reduced performance, dropping 1% lows by as much as 16%.

But it was the 4GB version where things went really wrong. The reduction in PCIe bandwidth from 4.0 x8 to 4.0 x4 hurt performance by 22%. Then switching to 3.0 destroyed it, making the game virtually unplayable with a 35 fps average.

The margins grew slightly at 1440p, but the results were much the same overall. If we assume the 6500 XT is going to behave in a similar fashion to the 5500 XT, that means at 1440p it will end up much worse off than parts like the 8GB 5500 XT and completely crippled in PCIe 3.0 systems.

Average Frame Rates

Here’s a breakdown of all 12 games tested. We skipped over Hitman 3 and Death Stranding as the results weren’t interesting and we didn’t want to drag this one out too long.

Here we’re comparing the average frame rate of the 4GB 5500 XT when using PCIe 4.0 x8, which is the default configuration for that model, to PCIe 3.0 x4. On average we’re looking at a massive 49% increase in performance for PCIe 4.0 x8, with gains as large as 171% seen in Doom. Best case was Resident Evil Village, which saw basically no difference, but that was a one off in our testing.

Even F1 2021 saw a 6% reduction, but that’s a best case result. Beyond that we’re looking at double-digit gains with well over half the games seeing gains larger than 20%, and remember we’re using medium quality presets for the most part.

Now if we normalize the X axis and switch to the 8GB model, here’s how small the performance variation is there when comparing PCIe 4.0 x8 to PCIe 3.0 x4. We’re still seeing some reasonably large performance gains due to the extra bandwidth, but overall the larger VRAM buffer has helped reduce inconsistencies, resulting in just an 8% improvement on average.

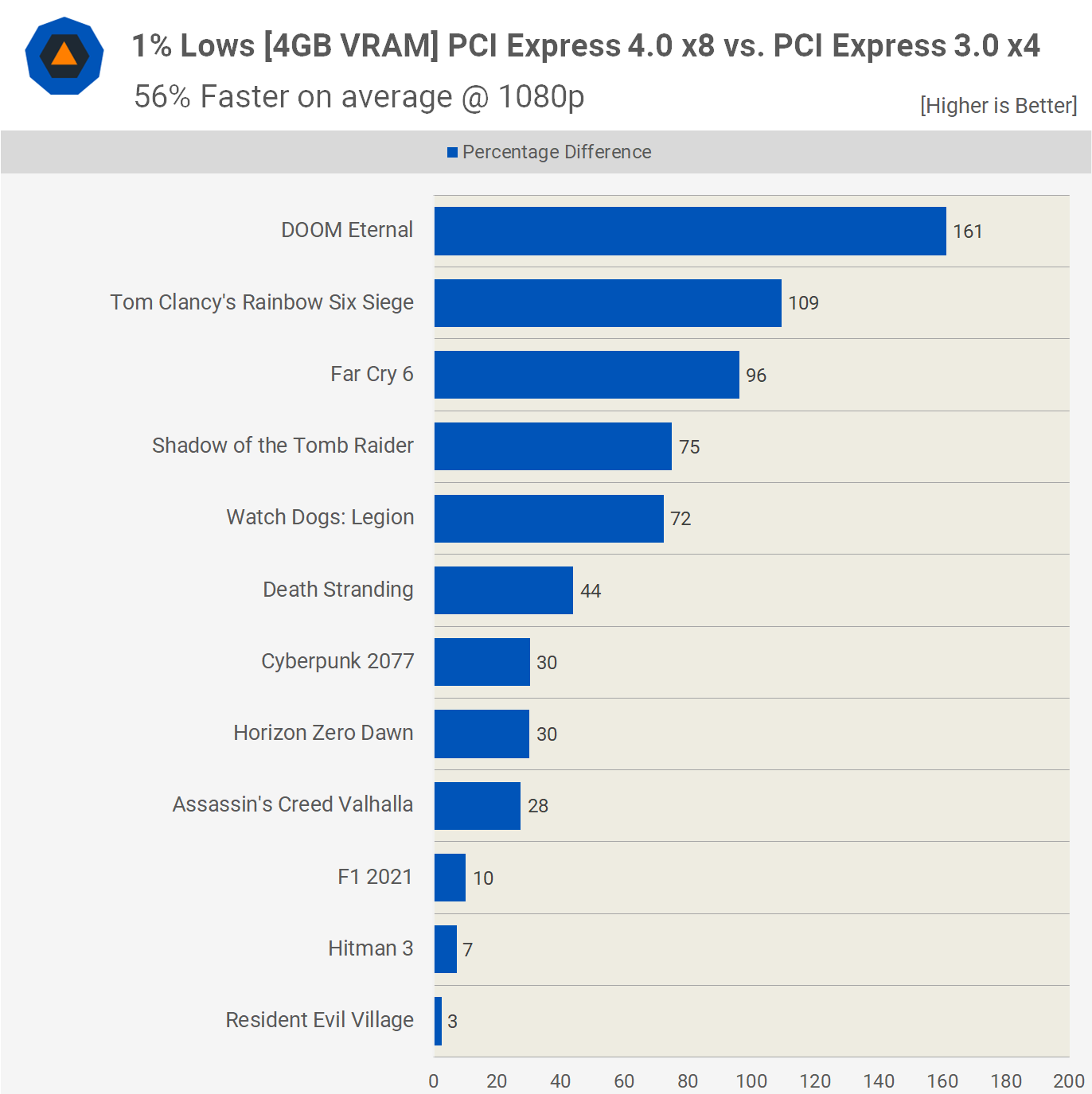

For those of you interested in the 1% low data, here’s a quick look at that. Comparing 1% lows sees the margin for the 4GB model blow out to 56% with most games seeing at least a 30% margin.

Then if we look at the 8GB model the performance overall is significantly more consistent. We’re looking at an 8% increase on average for the PCIe 4.0 x8 configuration when compared to PCIe 3.0 x4.

What We Learned

That was an interesting test, with a number of very telling results. Titles such as Watch Dogs: Legion and Far Cry 6 were particularly intriguing, because despite exceeding the 4GB buffer, the 4GB version of the 5500 XT performed very close to the 8GB model when given the full PCIe 4.0 x8 bandwidth that those GPUs support.

However, limiting the 4GB model to even PCIe 4.0 x4 heavily reduced performance, suggesting that out of the box the 6500 XT could be in many instances limited primarily by the PCIe connection, which is pretty shocking. It also strongly suggests that installing the 6500 XT into a system that only supports PCI Express 3.0 could in many instances be devastating to performance.

At this point we feel all reviewers should be mindful of this and make sure to test the 6500 XT in PCIe 3.0 mode. There’s no excuse not to do this as you can simply toggle between 3.0 and 4.0 in the BIOS. Of course, AMD is hoping reviewers overlook this and with most now testing on PCIe 4.0 systems, the 6500 XT might end up looking a lot better than it’s really going to be for users.

It’s well worth noting that the vast majority of gamers are limited to PCI Express 3.0. Intel systems, for example, only started supporting PCIe 4.0 with 11th-gen processors when using a 500 series motherboard, while AMD started supporting PCIe 4.0 with select Ryzen 3000 processors which required an X570 or B550 motherboard.

So, for example, if you have an AMD B450 motherboard you’re limited to PCIe 3.0. Furthermore, AMD’s latest budget processors such as the Ryzen 5 5600G are limited to PCIe 3.0, regardless of the motherboard used. In other words, anyone who has purchased a budget CPU to date, with the exception of the new Alder Lake parts, will be limited to PCI Express 3.0.

Now, you could argue that in games like F1 2021 and Resident Evil Village, where we kept VRAM usage well under 4GB, the 4GB 5500 XT was just fine, even with PCIe 3.0 x4 bandwidth. It’s true that under those conditions the performance hit should be little to nothing, but the problem is that ensuring VRAM usage stays well below 4 GB in current titles is going to be difficult, and in many instances not even possible.

Even if you’re in the know and can monitor this stuff, unless you’re aiming for 3GB or less, it’s hard to know just how close to the edge you are, unless you have an 8GB graphics card on hand to test that. And this is the problem, the 4GB 5500 XT was always right on a knife edge and often went too far. With the full PCIe 4.0 x8 bandwidth, it usually got away without too much of a performance hit, but with PCIe 3.0 x4 it almost always ran into trouble, and in extreme cases wasn’t able to manage playable performance.
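
To show how often the 4GB buffer was exceeded in this test, here is a short Python sketch that flags the games whose reported VRAM figure at 1080p was above a given buffer size. The figures are the ones quoted in this article (a mix of allocation and usage numbers, so treat them as approximate), and the dictionary and function names are hypothetical.

```python
# VRAM figures (GB) quoted in this article for our 1080p test settings.
REPORTED_VRAM_GB_1080P = {
    "Resident Evil Village": 3.4,
    "Doom Eternal (medium texture pool)": 4.1,
    "Assassin's Creed Valhalla": 4.2,
    "Watch Dogs: Legion": 4.5,
    "Horizon Zero Dawn": 6.4,
    "Shadow of the Tomb Raider": 7.0,
    "Far Cry 6": 7.2,
}


def over_budget(reported: dict[str, float], buffer_gb: float) -> list[str]:
    """Return the games whose reported VRAM figure exceeds the card's buffer."""
    return sorted(game for game, gb in reported.items() if gb > buffer_gb)


print("Over a 4GB buffer:", over_budget(REPORTED_VRAM_GB_1080P, 4.0))
print("Over an 8GB buffer:", over_budget(REPORTED_VRAM_GB_1080P, 8.0))
```

Of the games where we quoted a figure, only Resident Evil Village stays under 4 GB, while nothing exceeds the 8GB buffer.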

But this isn’t about the extreme cases, where we dropped to unplayable performance, it’s about the card being noticeably slower when using PCIe 3.0 x4 bandwidth. In the case of the 5500 XT, we went from 82 fps on average at 1080p in the 12 games tested to just 57 fps, that’s a huge 30% decline in performance.

What’s crazier is that when using the full PCIe 4.0 x8 bandwidth, the 4GB 5500 XT was 26% faster than the 4GB RX 570, but when limited to PCIe 3.0 x4 it ended up slower than the old RX 570 by a 12% margin. So we’ll say that again: the 5500 XT was 12% slower than the RX 570 when both are using PCI Express 3.0, but the 5500 XT was limited to 4 lanes whereas the 570 used all 16.
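
As a quick sanity check, the RX 570 comparison and the 12-game averages quoted above tell the same story. The Python sketch below is our own arithmetic, and it assumes "12% slower" means 0.88x the RX 570's frame rate.

```python
def percent_decline(before: float, after: float) -> float:
    """Percentage drop going from `before` to `after`."""
    return (1 - after / before) * 100


# 12-game 1080p averages for the 4GB 5500 XT: PCIe 4.0 x8 vs. PCIe 3.0 x4.
measured = percent_decline(before=82, after=57)

# Same gap implied by the RX 570 comparison, with the RX 570 normalized to 1.0.
# Assumption: "26% faster" = 1.26x and "12% slower" = 0.88x.
implied = percent_decline(before=1.26, after=0.88)

print(f"From the fps averages: {measured:.1f}% decline")   # 30.5% decline
print(f"From the RX 570 margins: {implied:.1f}% decline")  # 30.2% decline
```

Both routes land at roughly a 30% drop, which is what you would expect if the figures are consistent.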

The Radeon RX 6500 XT is likely to face the same problem, but as we mentioned in the introduction, it’s based on a different architecture, so maybe that will help. But if we see no change in behavior, the 6500 XT is going to end up being a disaster for PCIe 3.0 users and at best a mixed bag using PCIe 4.0. One thing is for sure, we’ll be testing all games with both PCIe 4.0 and 3.0 with the 6500 XT, and we’ll be using the same quality settings shown in this review.

That’s going to do it for this one. Hope you enjoyed this PCI Express performance investigation and don’t miss our RX 6500 XT review in the coming days.